Currently, many systems try to be real-time systems, or at least soft real-time ones. To achieve this, most of them abuse the polling methodology. This approach generates a lot of useless requests that overload the system. Why are they useless? Because polling tries to catch changes by requesting over and over again, yet it always has a delay: a change is only seen when the next request arrives after the new data is there, and every request made in between returns nothing new.

Nowadays we have languages like Erlang or Elixir (I am very excited about Elixir, and you will see some posts about it in the future), or frameworks like Akka. But what can we do when we have a lot of complex algorithms written in a language like Python, or when our team does not know Erlang?

For this reason, I decided to build a proof of concept (PoC) of a reactive architecture using Python. The PoC is a system that tracks the CPU usage of a machine, although it is (almost) ready to measure the resources used by many machines.

To build the complete system, I have chosen the Tornado web framework, RabbitMQ and AngularJS for the different modules.

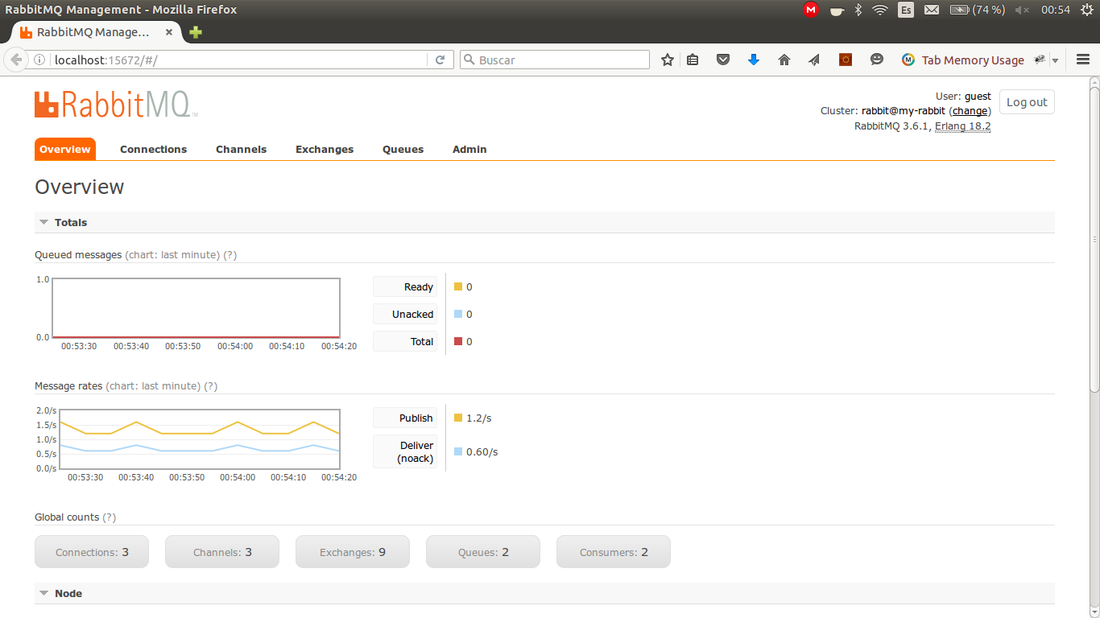

The first module, resources-tracker, will be in charge of measuring the CPU and sending each measure through the RabbitMQ broker using a topic exchange. The topic will be <name-of-the-machine>.measure.
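A minimal sketch of what the resources-tracker loop could look like, assuming the pika client (1.x API) and the psutil library for reading CPU usage. The exchange name `resources` and the JSON message fields are hypothetical, not taken from the project. The broker-facing part lives in `run_tracker`, which is not called here, so the message-building helpers can be tried without RabbitMQ.

```python
import json
import socket
import time
from typing import Tuple

EXCHANGE = "resources"  # hypothetical exchange name, not taken from the project


def build_measure(machine: str, cpu_percent: float, timestamp: float) -> Tuple[str, bytes]:
    """Return the routing key <machine>.measure and a JSON body (hypothetical format)."""
    body = {"machine": machine, "cpu": cpu_percent, "timestamp": timestamp}
    return f"{machine}.measure", json.dumps(body).encode("utf-8")


def publish_measure(channel, machine: str, cpu_percent: float, exchange: str = EXCHANGE) -> None:
    # Any object with pika's basic_publish(exchange=, routing_key=, body=) works here.
    routing_key, body = build_measure(machine, cpu_percent, time.time())
    channel.basic_publish(exchange=exchange, routing_key=routing_key, body=body)


def run_tracker(host: str = "localhost", period: float = 1.0) -> None:
    """Measure the CPU forever and publish each value (needs pika >= 1.0 and psutil)."""
    import pika  # imported here so the helpers above work without these packages
    import psutil

    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    channel = connection.channel()
    # Re-declaring an existing exchange with the same arguments is a no-op
    channel.exchange_declare(exchange=EXCHANGE, exchange_type="topic")
    machine = socket.gethostname()
    try:
        while True:
            # Blocks for `period` seconds and returns the CPU usage over that window
            publish_measure(channel, machine, psutil.cpu_percent(interval=period))
    finally:
        connection.close()
```

With a broker running on localhost, calling `run_tracker()` would publish roughly one measure per second under the machine's host name.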

The next module, resources-analyzer, will receive all the measures (topic: #.measure), determine the CPU usage range of the machine (HIGH, MEDIUM or LOW) and send it through the RabbitMQ broker using the topic <name-of-the-machine>.analysis, where the machine name is taken from the received measure.
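The analyzer step can be sketched as below, assuming each measure is a JSON object with hypothetical `machine` and `cpu` fields and using the pika 1.x consumer API. The 70% and 30% thresholds and the `resources` exchange name are assumptions: the text names the three ranges but not their limits. The routing key is rewritten by stripping the `.measure` suffix, so machine names that contain dots are kept intact.

```python
import json
from typing import Tuple

EXCHANGE = "resources"   # hypothetical exchange name
HIGH_THRESHOLD = 70.0    # hypothetical limits for the HIGH / MEDIUM / LOW ranges
MEDIUM_THRESHOLD = 30.0
MEASURE_SUFFIX = ".measure"


def classify(cpu_percent: float) -> str:
    """Map a CPU percentage to one of the ranges HIGH, MEDIUM or LOW."""
    if cpu_percent >= HIGH_THRESHOLD:
        return "HIGH"
    if cpu_percent >= MEDIUM_THRESHOLD:
        return "MEDIUM"
    return "LOW"


def analysis_routing_key(measure_key: str) -> str:
    # "<machine>.measure" -> "<machine>.analysis"
    if not measure_key.endswith(MEASURE_SUFFIX):
        raise ValueError(f"not a measure topic: {measure_key!r}")
    return measure_key[: -len(MEASURE_SUFFIX)] + ".analysis"


def analyze(measure_key: str, body: bytes) -> Tuple[str, bytes]:
    """Turn one measure message into (routing_key, body) for its analysis."""
    measure = json.loads(body)
    result = {"machine": measure["machine"], "range": classify(measure["cpu"])}
    return analysis_routing_key(measure_key), json.dumps(result).encode("utf-8")


def run_analyzer(host: str = "localhost") -> None:
    """Consume every measure and publish its analysis (needs pika >= 1.0)."""
    import pika  # imported here so the pure functions above work without pika

    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    channel = connection.channel()
    channel.exchange_declare(exchange=EXCHANGE, exchange_type="topic")
    # Exclusive, server-named queue bound to every measure topic
    queue = channel.queue_declare(queue="", exclusive=True).method.queue
    channel.queue_bind(exchange=EXCHANGE, queue=queue, routing_key="#.measure")

    def on_measure(ch, method, properties, body):
        key, payload = analyze(method.routing_key, body)
        ch.basic_publish(exchange=EXCHANGE, routing_key=key, body=payload)

    channel.basic_consume(queue=queue, on_message_callback=on_measure, auto_ack=True)
    channel.start_consuming()
```

Keeping `classify` and `analyze` free of any broker code means the analysis logic (the part most likely to grow into "complex algorithms") can be tested on its own.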

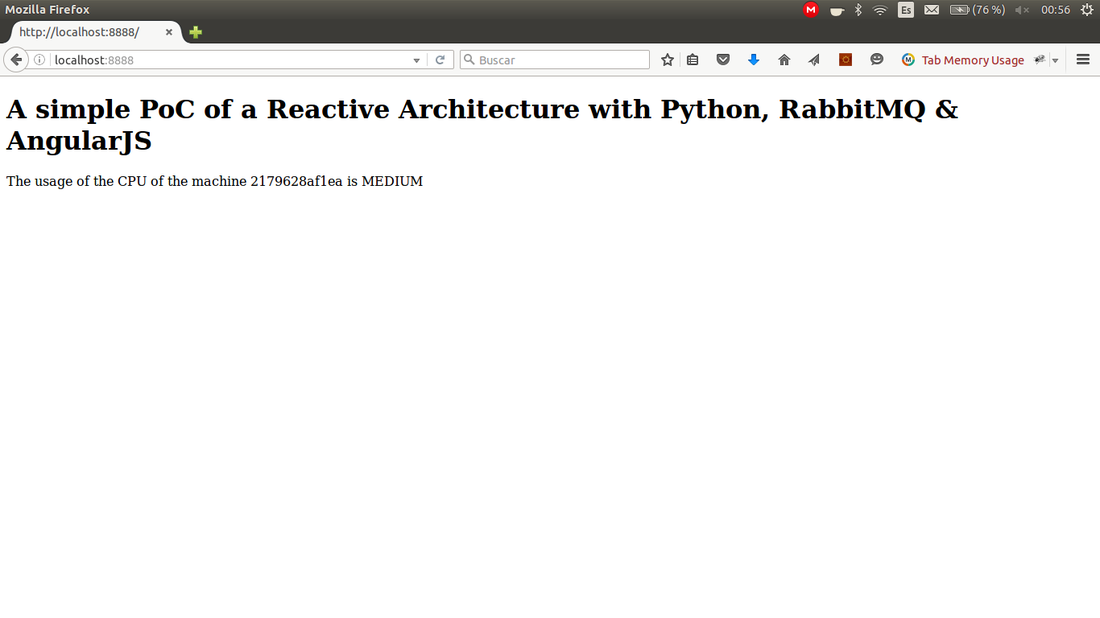

The last module, resources-web, will show the results of the previous module. For this task, it uses the Tornado web framework, which will be connected to RabbitMQ through Pika's TornadoConnection and to a simple AngularJS client through a WebSocket.
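A possible shape for the WebSocket side of resources-web, as a sketch: a hypothetical `ClientRegistry` tracks the open connections and pushes each analysis to all of them. The assumption is that the RabbitMQ consumer (built on Pika's TornadoConnection, not shown here) calls `registry.broadcast(body)` for every <name-of-the-machine>.analysis message, so both run on Tornado's event loop. The `/ws` path and the class names are illustrative only.

```python
from typing import Set


class ClientRegistry:
    """Keeps the open WebSocket clients and pushes every analysis to all of them."""

    def __init__(self) -> None:
        self._clients: Set[object] = set()

    def add(self, client) -> None:
        self._clients.add(client)

    def remove(self, client) -> None:
        self._clients.discard(client)

    def broadcast(self, body: bytes) -> int:
        """Send one analysis message to every client; return how many received it."""
        message = body.decode("utf-8")
        for client in list(self._clients):
            client.write_message(message)  # Tornado's WebSocketHandler.write_message
        return len(self._clients)


def make_app(registry: ClientRegistry):
    """Build a Tornado application exposing the WebSocket at /ws (needs tornado)."""
    import tornado.web  # imported here so ClientRegistry works without tornado
    import tornado.websocket

    class AnalysisSocket(tornado.websocket.WebSocketHandler):
        def initialize(self, registry: ClientRegistry) -> None:
            self.registry = registry

        def check_origin(self, origin: str) -> bool:
            return True  # permissive for a PoC; restrict the origin in production

        def open(self) -> None:
            self.registry.add(self)

        def on_close(self) -> None:
            self.registry.remove(self)

    return tornado.web.Application([(r"/ws", AnalysisSocket, dict(registry=registry))])
```

On the browser side, the AngularJS client would only need to open a WebSocket to that URL and update its model in the socket's message handler; it never issues a request for new data.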

Therefore, each time a CPU measure is taken, the final result will be shown in the browser without the client making any request.

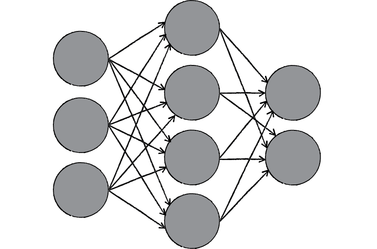

This approach makes the system very scalable and easy to extend. Everything can be built as a module that listens to the right topics and publishes its result on another topic, so that other modules can use it. The final picture may remind you of a neural network.
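To illustrate how modules chain purely through topics, here is a broker-free sketch: a small in-memory bus that applies RabbitMQ's topic-matching rules (`*` matches exactly one dot-separated word, `#` matches zero or more). The analyzer and web stand-ins mirror the modules above in simplified form (with assumed 70% and 30% thresholds), and the alert module is a hypothetical example of a new module added without touching the others. This is a teaching aid, not a replacement for RabbitMQ.

```python
from typing import Callable, List, Tuple

Handler = Callable[[str, str], None]


def topic_matches(pattern: str, routing_key: str) -> bool:
    """RabbitMQ topic rules: '*' matches exactly one word, '#' zero or more words."""
    return _match(pattern.split("."), routing_key.split("."))


def _match(pattern: List[str], words: List[str]) -> bool:
    if not pattern:
        return not words
    head, rest = pattern[0], pattern[1:]
    if head == "#":
        # Let '#' absorb 0, 1, ..., len(words) words and try the rest of the pattern
        return any(_match(rest, words[i:]) for i in range(len(words) + 1))
    if words and (head == "*" or head == words[0]):
        return _match(rest, words[1:])
    return False


class InMemoryTopicBus:
    """Stand-in for a topic exchange so the module wiring can be tried without a broker."""

    def __init__(self) -> None:
        self._subscriptions: List[Tuple[str, Handler]] = []

    def subscribe(self, pattern: str, handler: Handler) -> None:
        self._subscriptions.append((pattern, handler))

    def publish(self, routing_key: str, body: str) -> None:
        for pattern, handler in list(self._subscriptions):
            if topic_matches(pattern, routing_key):
                handler(routing_key, body)


def machine_of(routing_key: str) -> str:
    # "<machine>.<kind>" -> "<machine>"
    return routing_key.rsplit(".", 1)[0]


def wire(bus: InMemoryTopicBus, pushed: List[Tuple[str, str]]) -> None:
    """Connect the modules through topics only; none of them knows the others."""

    def analyzer(key: str, body: str) -> None:
        cpu = float(body)  # assumed thresholds: 70% and 30%
        level = "HIGH" if cpu >= 70 else "MEDIUM" if cpu >= 30 else "LOW"
        bus.publish(machine_of(key) + ".analysis", level)

    def alerter(key: str, body: str) -> None:  # hypothetical extra module
        if body == "HIGH":
            bus.publish(machine_of(key) + ".alert", "CPU usage is HIGH")

    def web(key: str, body: str) -> None:  # stand-in for the WebSocket push
        pushed.append((key, body))

    bus.subscribe("#.measure", analyzer)
    bus.subscribe("*.analysis", alerter)  # '*': single-word machine names only
    bus.subscribe("#.analysis", web)
    bus.subscribe("#.alert", web)
```

Adding the alert module required one new subscription and no change to the tracker, analyzer or web module, which is the property the architecture relies on.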

All the code can be found on GitHub. Each module contains a Dockerfile, which makes it much easier to run the entire system using Docker.